4 More Ways Designers Can Make It Easier For Generative AI Users to Write Prompts

How might we redesign the input boxes of generative AI tools to make it easier for users to articulate their ideal prompts?

[This is part 2 of a series on designing for generative AI prompting. You can read the first part here.]

As stated in part 1, many users aren’t the most thorough writers. It’s common to struggle to articulate our thoughts and needs, both in everyday life and when using generative AI. Jakob Nielsen, co-founder of the design organization Nielsen Norman Group, describes this as an “articulation barrier”.

So, how might we redesign the humble input box to help users confidently create more thorough, more specific, and more successful prompts?

1. Let Users Save Prompt Templates

If someone uses your tool daily (for reports, social media captions, code reviews), let them save and reuse their best prompts so they can skip the repetitive typing. Consider giving prompt templates their own page so users don’t have to dig through old chats to find them.

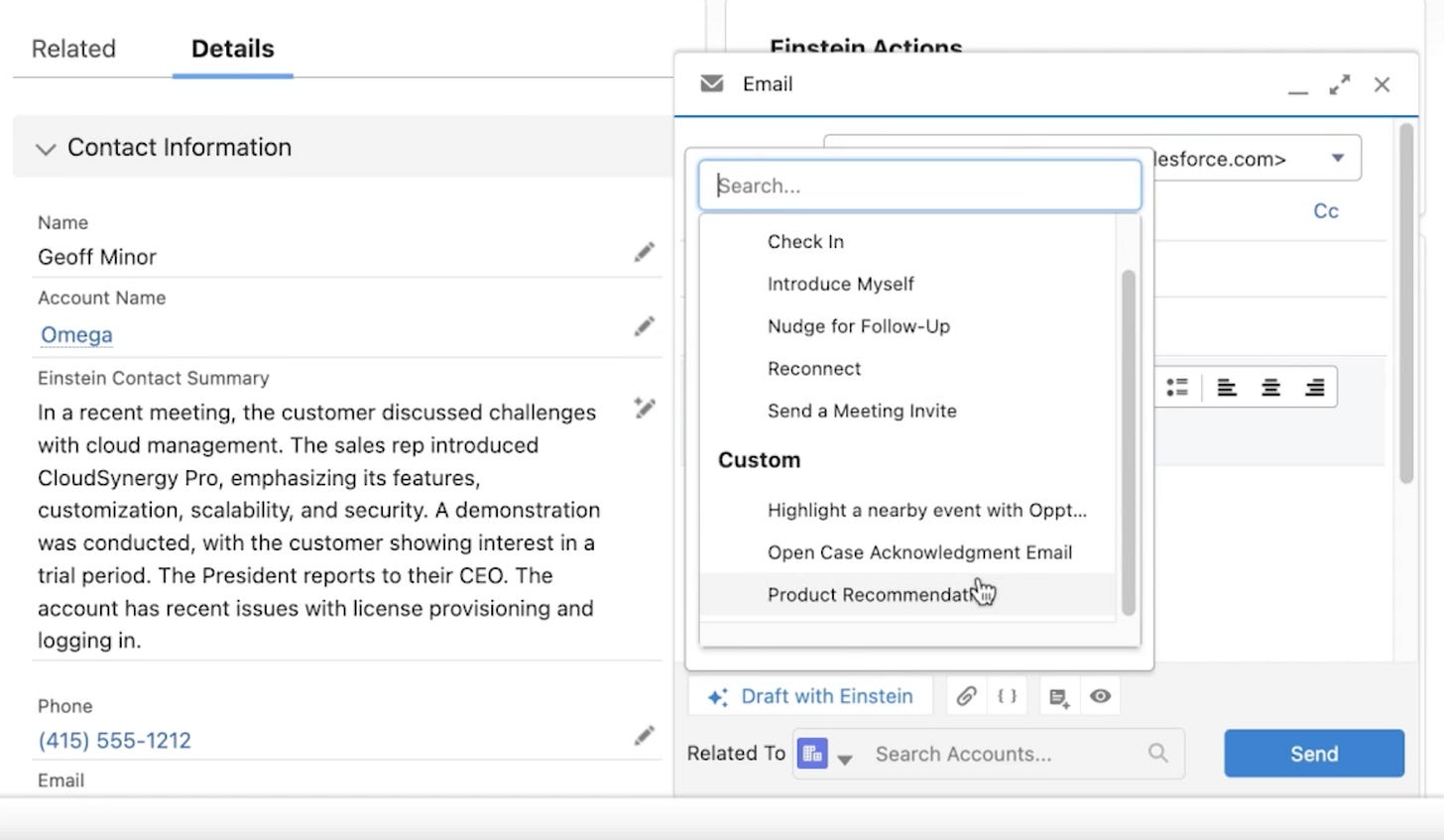

A notable B2B example is Prompt Builder from the CRM Salesforce, which helps users build a prompt library for a variety of use cases, such as emails tailored to different stages of outreach or customer segments. As they use Salesforce’s AI to email customers, users can pick a premade prompt from a dropdown menu. Selecting a template generates an email tailored to the customer’s business data, such as their industry and past interactions.

This strategy is especially useful for: enterprise tools, where users often repeat similar workflows and use lengthy prompts.

This may not work so well for: casual or infrequent-use tools, where users are unlikely to reuse similar lengthy prompts. For multipurpose tools like ChatGPT, consider adding a section where users can save frequently used prompts if there is demand for it.

2. Improve User Prompts Yourself

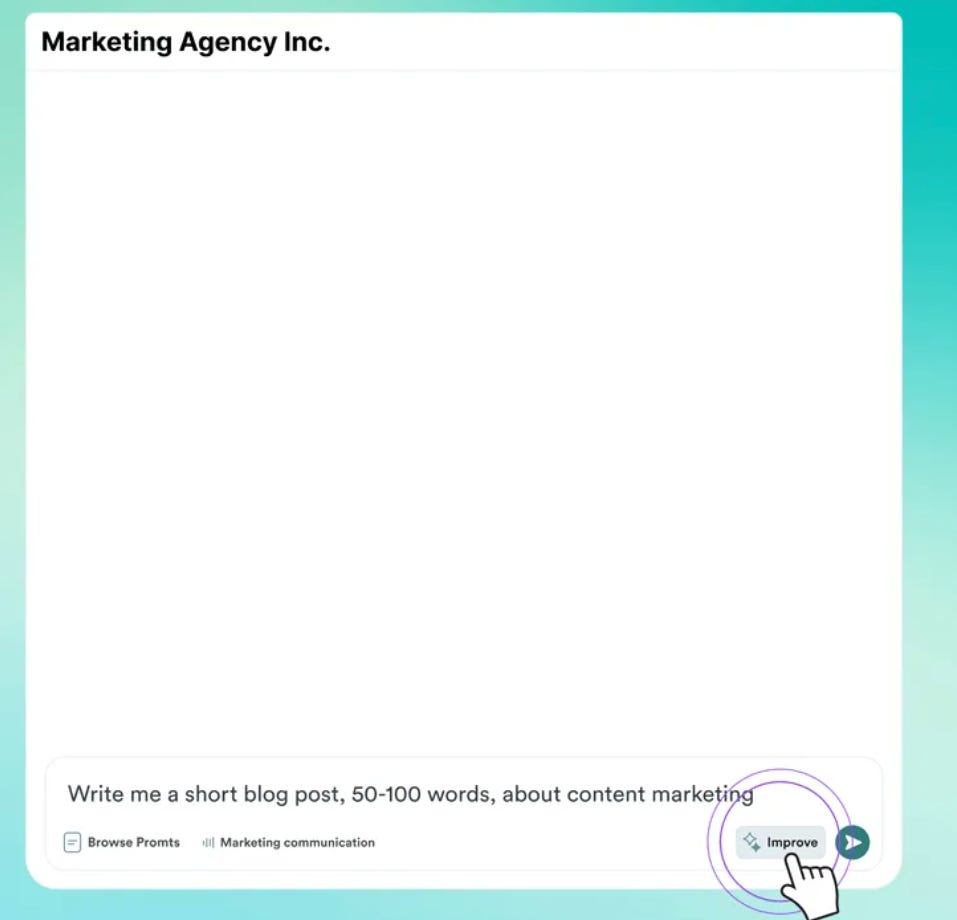

Don’t want to squeeze predefined fields or extra UI around your main text box? You can follow in the footsteps of Copy.ai and let users choose whether they’d like your system to improve their prompts automatically, making them clearer and more concise.

This way, users see a better-written, more thorough version of their prompt that can serve as an example for their future prompt writing.

This strategy is especially useful for: image generators, where users frequently get unexpected visuals due to vague phrasing. Refining prompts before submission can reduce trial and error. Content generators and other tools where prompts are expected to be descriptive, such as wireframe generators for UX designers, might benefit from this too.

This may not work so well for: anything deeply sentimental, where even suggesting that users can improve their writing may come off as insensitive. An AI companion like Replika is one example: active users are often in a more emotionally vulnerable state and may just want to be heard as they are. The same goes for general-purpose and conversational bots, where inputs don’t always need to be descriptive. You don’t need to “improve” queries like “why is my cat making weird noises” or “tell me some facts about Mayan spirituality before I go to Tulum”.

3. Give Their Prompting Real-Time Feedback

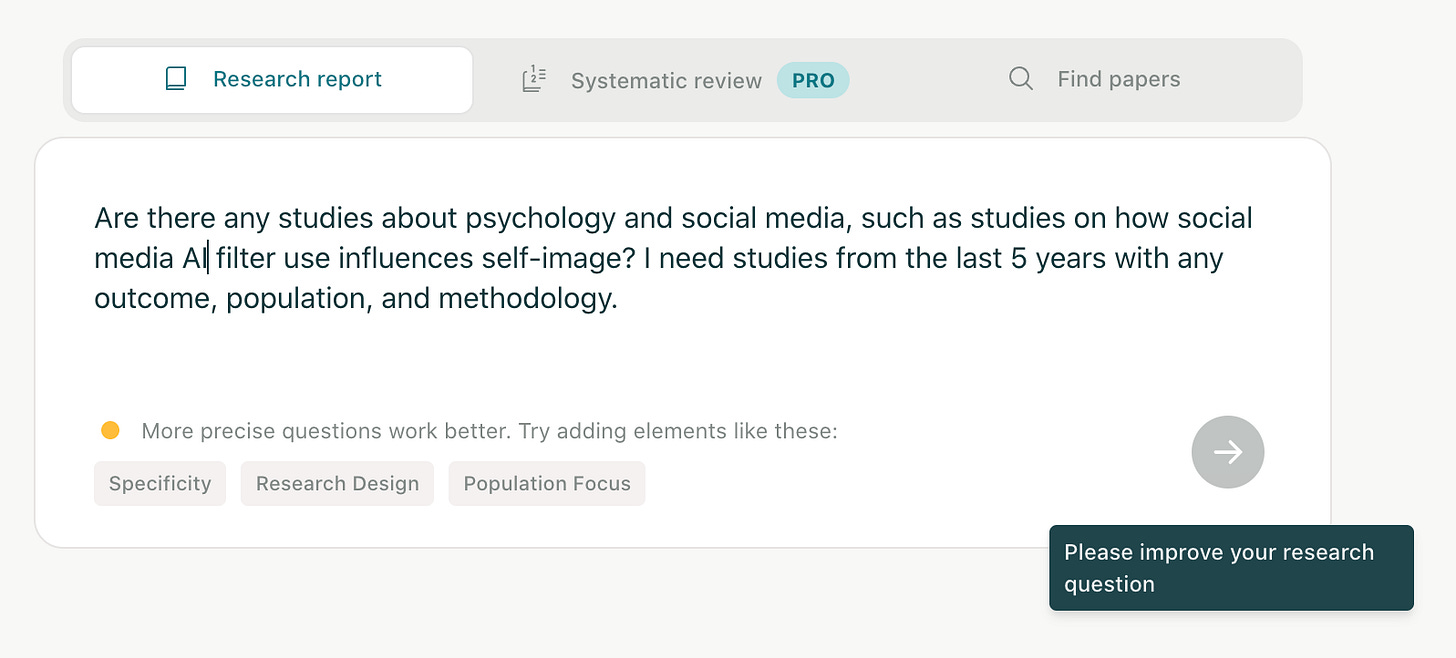

Between the rigidity of the aforementioned description input boxes and the vagueness of an “Improve” button sits generative UI that suggests how users can improve their prompt as they write it. You can see this in action in the research assistant tool Elicit.

This strategy is especially useful for: tools generally used in a professional capacity such as research assistants like Elicit above, where users are typically sitting down and actually have the time and headspace to refine their prompts.

This may not work so well for: again, anything deeply sentimental, such as an AI diary, where even suggesting that users can improve their writing may come off as insensitive. If your users usually just ask simple questions, this feedback probably won’t be necessary. If they’re often on the go, as I was when using Microsoft Copilot on mobile, feedback might feel intrusive or get in the way, even if you’re just trying to help.
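To make the real-time feedback idea concrete, here is a rough Python sketch of what the logic behind the hints could look like. It is not how Elicit works. The `CHECKS` patterns, the hint wording, and the `MIN_WORDS` threshold are all assumptions, and a production tool would likely use something smarter than keyword matching. The sketch stays silent on short drafts so that simple questions don’t trigger unsolicited advice.

```python
import re

# Hypothetical checks: each pairs a pattern the draft should contain
# with the hint to show when that pattern is missing.
CHECKS = [
    (r"\b(audience|readers?|for (a|an|my|our))\b", "Say who the output is for."),
    (r"\b(tone|formal|casual|friendly)\b", "Mention the tone you want."),
    (r"\b(\d+ (words|sentences|paragraphs)|short|brief|long)\b", "Give a target length."),
]

MIN_WORDS = 6  # Assumed threshold; tune it against real usage data.


def suggest_improvements(draft: str) -> list[str]:
    """Return hints for the current draft; an empty list means stay quiet."""
    text = draft.lower()
    if len(text.split()) < MIN_WORDS:
        # Short drafts are often simple questions, so don't interrupt.
        return []
    return [hint for pattern, hint in CHECKS if not re.search(pattern, text)]


# Call this on each pause in typing and render the hints near the input box.
hints = suggest_improvements("Write a blog post about remote work for my team")
print(hints)
```

Returning an empty list rather than a "looks good!" message keeps the UI out of the way when there is nothing useful to say.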

4. Briefly Explain The Output

You’ve probably had at least one instance of “uh, how did the AI come up with this abomination?! What did I do?”

If your users often give vague inputs and frequently iterate, you might consider giving brief explanations of why the tool’s output looks the way it does. This shows the user how your system interpreted their prompts, helping them refine their input while providing transparency.

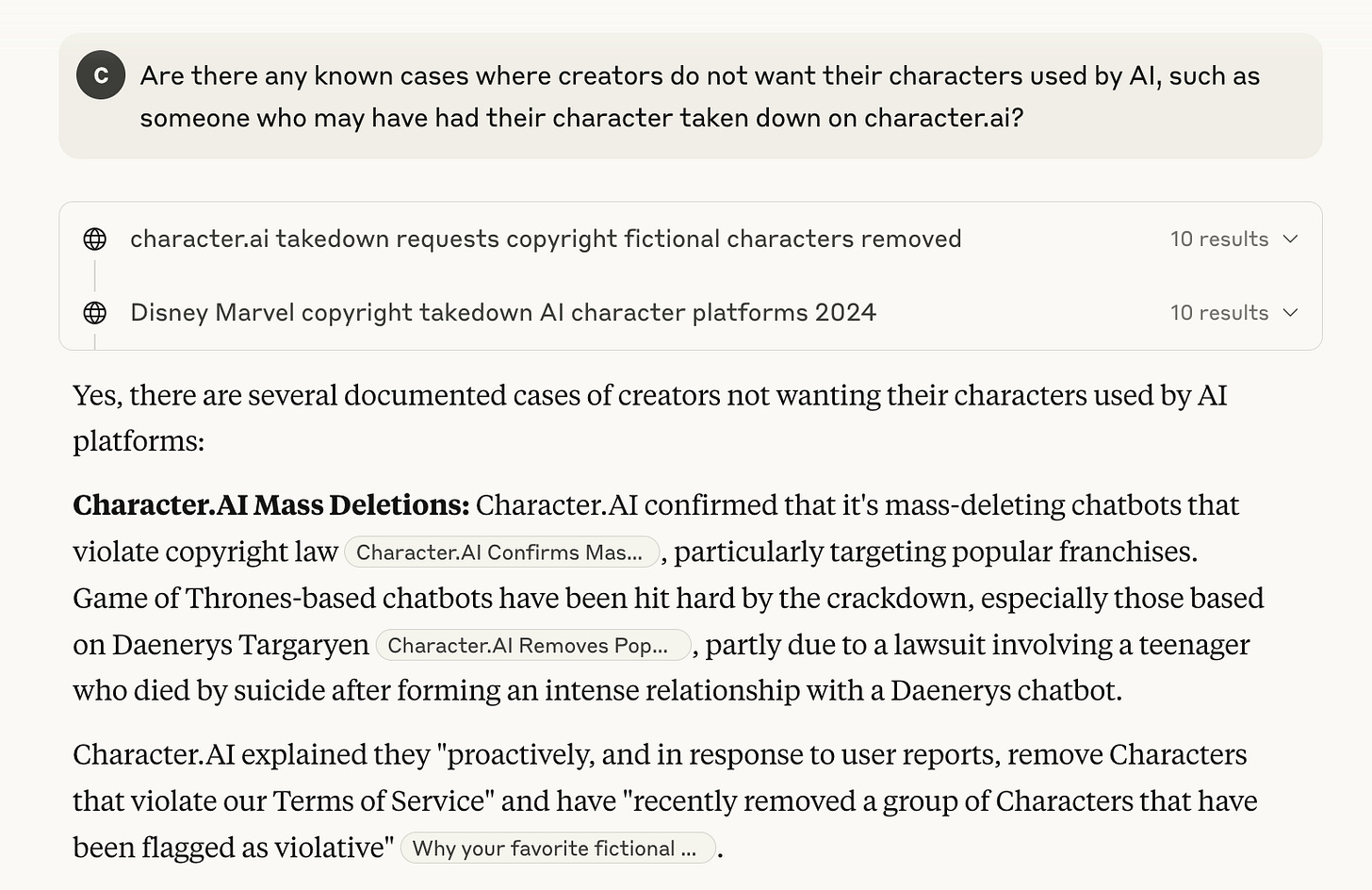

A common way bots show their reasoning is through clickable citations that users can follow to quickly verify the output, as you can see on Claude.

This strategy is especially useful for: any tool with complex outputs like the above, where understanding what happens behind the scenes builds user trust and confidence.

This may not work so well for: simpler tools where explanations might be unnecessary.

‼️ As stated in part 1 of this series, please don’t assume that each tip above will work for your tool! What will work depends on your users’ unique needs, pain points, usage patterns, goals, and more. Just take the above as inspiration for how you might solve their problems. Perhaps your own distinctive version of one of the above might make an impact.